ChatGPT has been all the rage lately, so I figured I'd try hooking the OpenAI API up to a Telegram group for fun, and document how to deploy to GCP App Engine along the way!

The code is here: https://github.com/stevenyu113228/OpenAI-GPT-3-Telegram-Chatbot

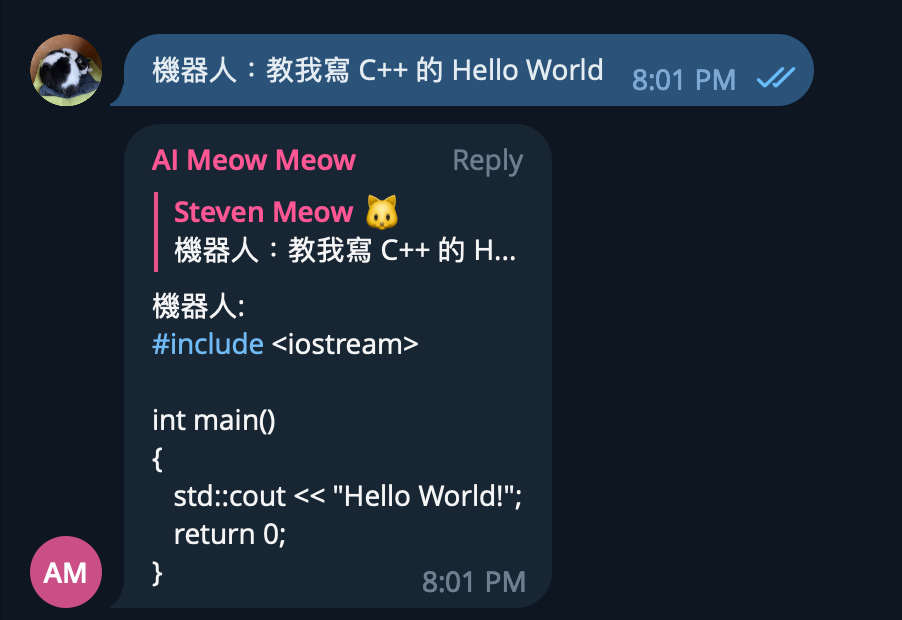

The result

OpenAI

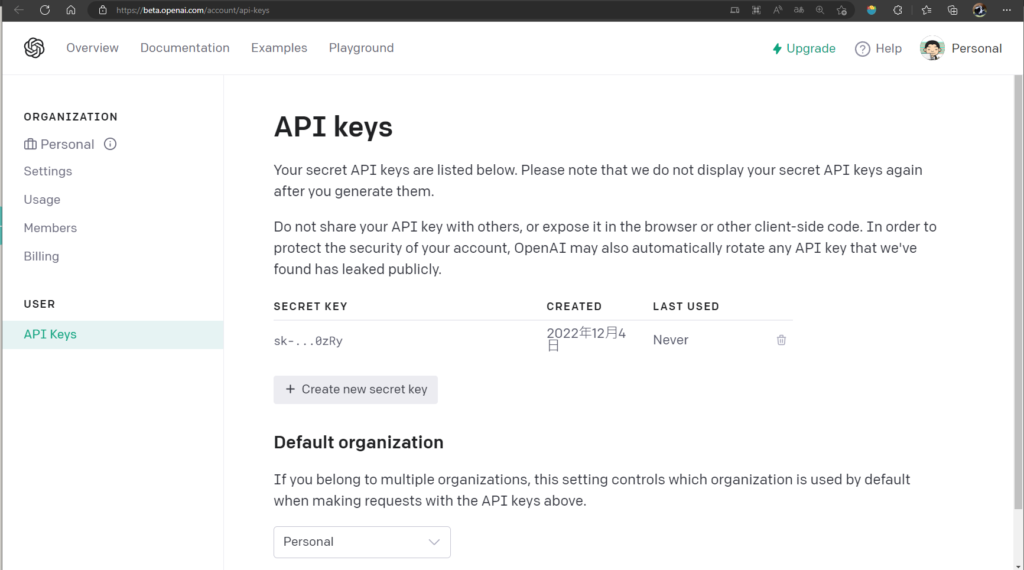

First, go to https://beta.openai.com/account/api-keys

and request an API token.

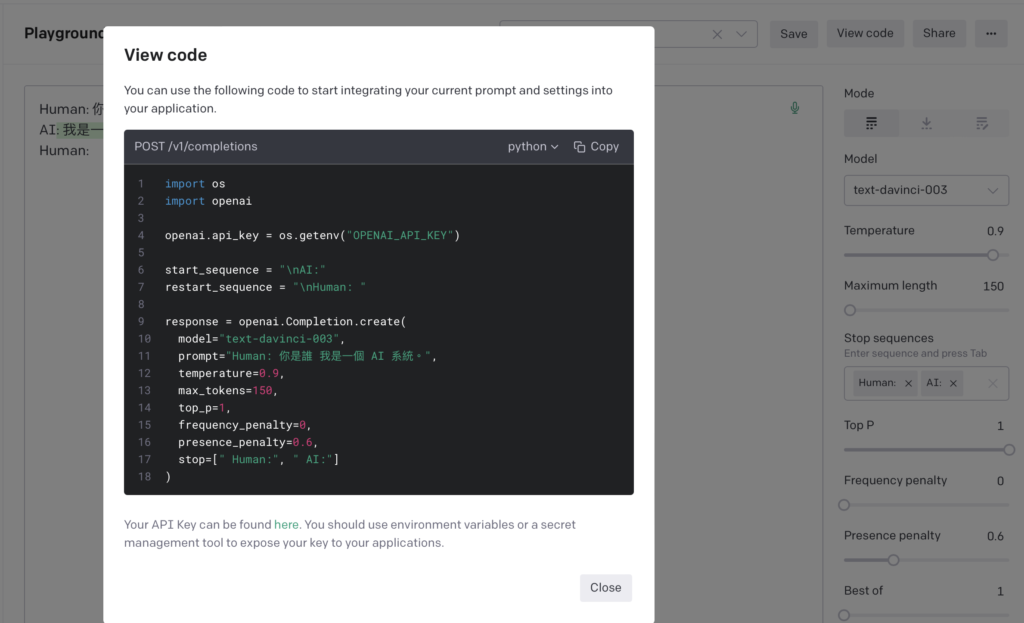

Play around in the Playground for a bit, then copy its example code and tweak it:

    import os
    import openai

    openai.api_key = os.getenv("OPENAI_API_KEY")

    start_sequence = "\nA:"
    restart_sequence = "\n\nQ: "

    response = openai.Completion.create(
        model="text-davinci-003",
        prompt="Q: ",
        temperature=0,
        max_tokens=100,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0,
        stop=["\n"]
    )

Telegram API

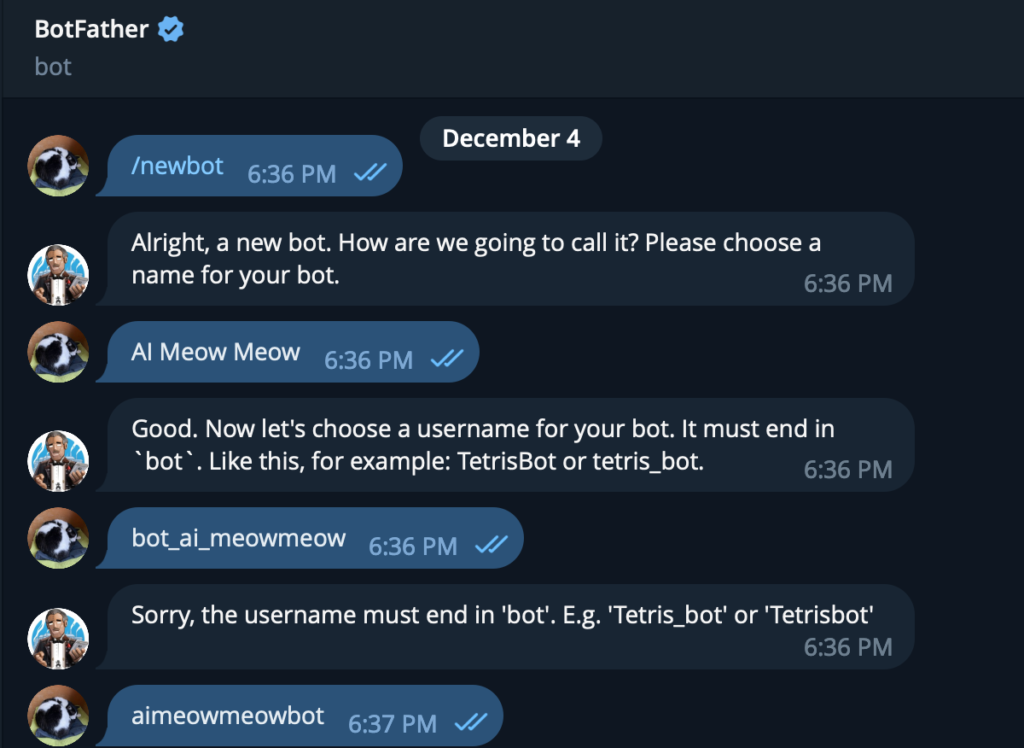

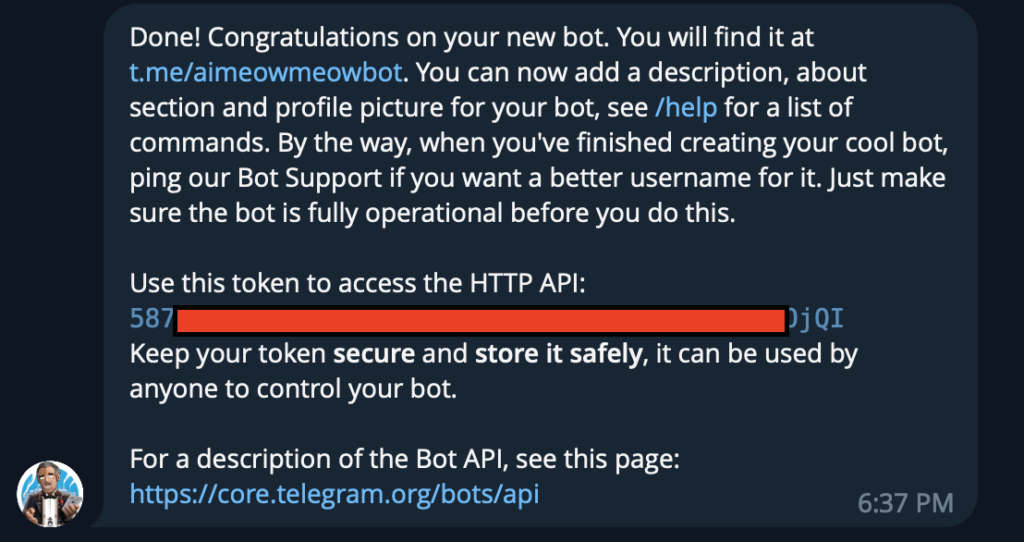

Next, go to Telegram's BotFather and create a new bot.

BotFather replies with an API token. Copy it down

and put it in our config file (config.ini), together with the OpenAI key:

[Telegram]

token=xxxxx:xxxxxxxx

[OPENAI]

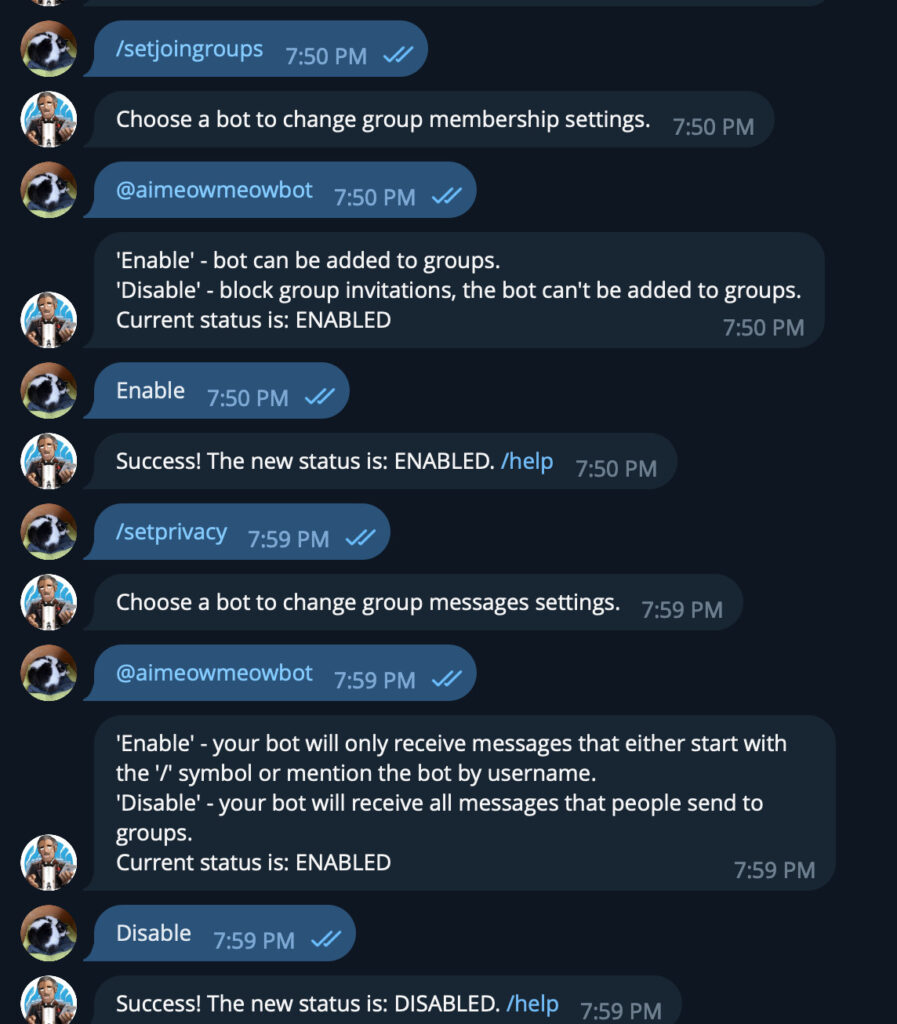

key=xxxxxxxxxxxxxxxxxxxxx接下来回到Botfather设定/setjoingroups为Enable,以及/setprivacy为Disable

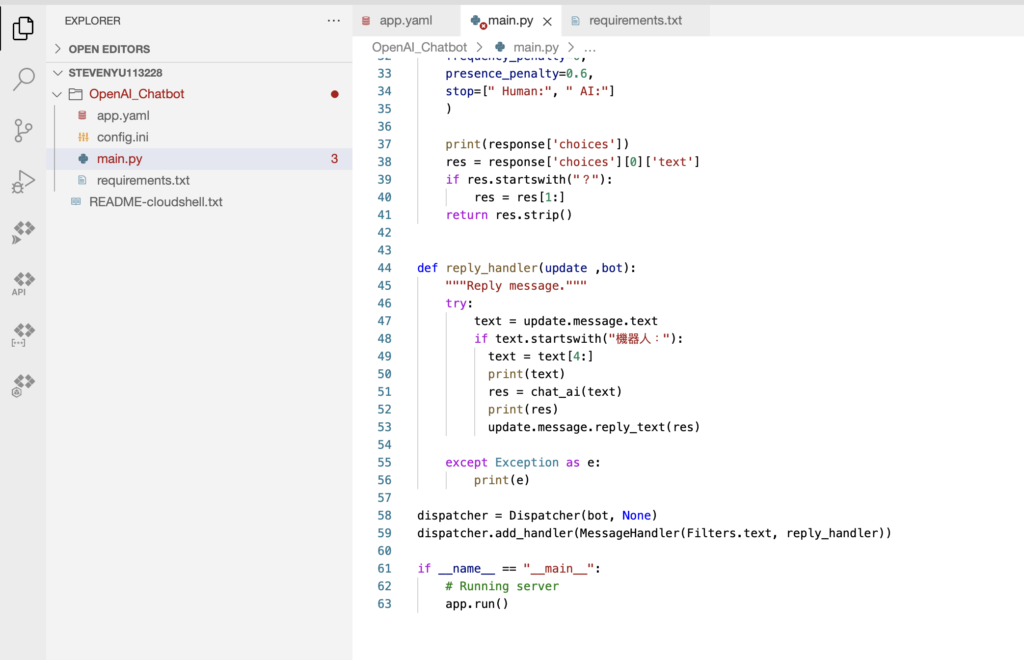
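As a small sanity check, the config.ini layout above can be loaded and validated before the bot starts. This is only a sketch: `load_settings`, `REQUIRED`, and `SAMPLE` are names made up for illustration, and the sample values are the same placeholders as above. Note that configparser treats section names as case-sensitive, so `[OPENAI]` and `[openai]` are different sections.

```python
import configparser

# Placeholder contents mirroring the config.ini shown above.
SAMPLE = """\
[Telegram]
token=xxxxx:xxxxxxxx

[OPENAI]
key=xxxxxxxxxxxxxxxxxxxxx
"""

# Section -> option pairs the bot needs (section names are case-sensitive).
REQUIRED = {"Telegram": "token", "OPENAI": "key"}


def load_settings(parser):
    """Return (telegram_token, openai_key), or raise ValueError naming what is missing."""
    for section, option in REQUIRED.items():
        if not parser.has_option(section, option):
            raise ValueError(f"config.ini is missing [{section}] {option}")
    return parser["Telegram"]["token"], parser["OPENAI"]["key"]


parser = configparser.ConfigParser()
parser.read_string(SAMPLE)  # in the bot itself this would be parser.read("config.ini")
token, key = load_settings(parser)
print(token)
```

Failing early with a clear message is easier to debug than a KeyError in the middle of a webhook request.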

Full code

Now add a few more details and wire in the Telegram API:

    import configparser

    import openai
    import telegram
    from flask import Flask, request
    from telegram.ext import Dispatcher, MessageHandler, Filters

    # Load the Telegram and OpenAI credentials from config.ini
    config = configparser.ConfigParser()
    config.read("config.ini")

    bot = telegram.Bot(token=config['Telegram']['token'])
    app = Flask(__name__)


    @app.route('/hook', methods=['POST'])
    def webhook_handler():
        """Receive an update from Telegram and pass it to the dispatcher."""
        if request.method == "POST":
            update = telegram.Update.de_json(request.get_json(force=True), bot)
            dispatcher.process_update(update)
        return 'ok'


    openai.api_key = config['OPENAI']['key']


    def chat_ai(input_str):
        """Send one question to the completion endpoint and return the answer text."""
        response = openai.Completion.create(
            model="text-davinci-003",
            prompt=f"Human: {input_str} \n AI:",
            temperature=0.9,
            max_tokens=999,
            top_p=1,
            frequency_penalty=0,
            presence_penalty=0.6,
            stop=[" Human:", " AI:"]
        )
        print(response['choices'])
        res = response['choices'][0]['text']
        # Drop a stray leading question mark (ASCII or full-width)
        if res.startswith(("?", "?")):
            res = res[1:]
        return res.strip()


    def reply_handler(update, context):
        """Reply message."""
        try:
            text = update.message.text
            # Only answer messages that start with the trigger prefix "機器人:" ("Bot:")
            if text.startswith("機器人:"):
                text = text[4:]
                print(text)
                res = chat_ai(text)
                print(res)
                update.message.reply_text(res)
        except Exception as e:
            print(e)


    dispatcher = Dispatcher(bot, None)
    dispatcher.add_handler(MessageHandler(Filters.text, reply_handler))

    if __name__ == "__main__":
        # Run the Flask development server (127.0.0.1:5000 by default)
        app.run()

CloudFlare Tunnel

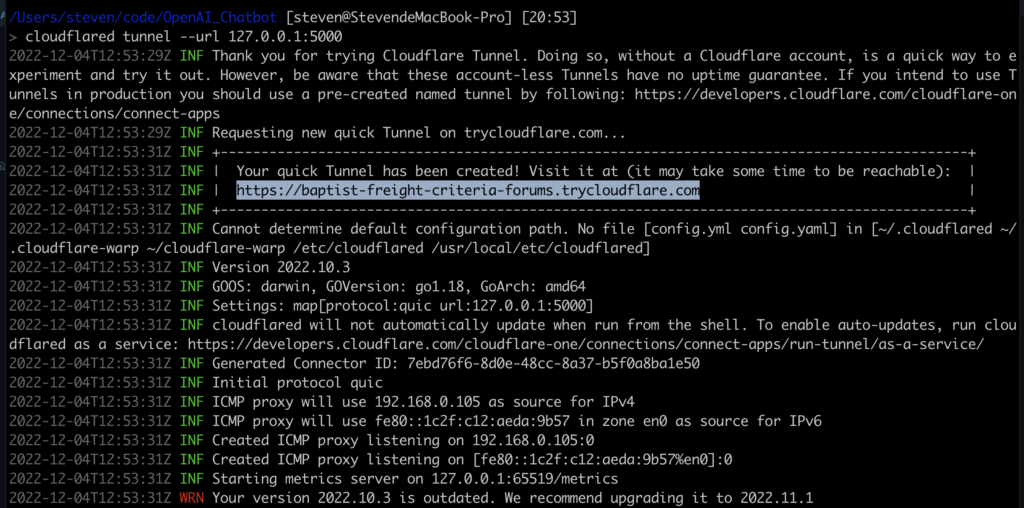

Next, use cloudflared to open a tunnel to 127.0.0.1:5000:

    cloudflared tunnel --url 127.0.0.1:5000

Copy the URL it prints. In the screenshot above, that is

https://baptist-freight-criteria-forums.trycloudflare.com

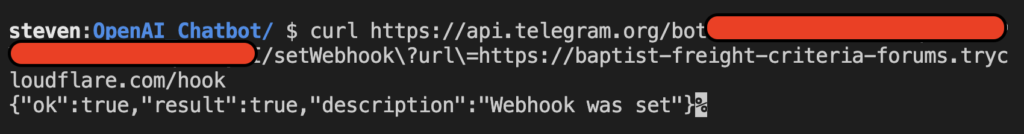

Hit the following URL once, with curl or a browser, to register the webhook:

https://api.telegram.org/bot{API_TOKEN}/setWebhook?url=https://{CLOUD_FLARE_TUNNEL}/hook
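If you would rather build that URL in code than by hand, a minimal sketch could look like the following. The helper name `build_set_webhook_url` and the token and host values are placeholders invented for this example; only the `setWebhook` method and its `url` parameter come from the Telegram Bot API. The sketch percent-encodes the webhook address, which is the safer form for a query string.

```python
from urllib.parse import urlencode


def build_set_webhook_url(api_token, public_host, path="/hook"):
    """Build the Telegram setWebhook URL for a bot token and a public HTTPS host."""
    hook_url = f"https://{public_host}{path}"
    query = urlencode({"url": hook_url})  # percent-encodes ':' and '/'
    return f"https://api.telegram.org/bot{api_token}/setWebhook?{query}"


# Placeholder token and host, for illustration only.
print(build_set_webhook_url("123456:PLACEHOLDER", "example.trycloudflare.com"))
```

Opening the resulting URL with curl or a browser has the same effect as the hand-written one; on success Telegram answers with a JSON body whose `ok` field is true.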

Then just start the bot with python3 main.py.
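The text handling in the bot (trigger prefix, prompt format, reply cleanup) can also be tried offline, without calling Telegram or OpenAI. The sketch below is an illustration rather than the code from the repository: the helper names `extract_question`, `build_prompt`, and `clean_reply` are made up, and the cleanup strips whitespace before checking for a leading question mark, which is a small variation on the original order.

```python
TRIGGER = "機器人:"  # trigger prefix used by the bot ("Bot:")


def extract_question(message_text):
    """Return the text after the trigger prefix, or None if the message is not for the bot."""
    if not message_text.startswith(TRIGGER):
        return None
    return message_text[len(TRIGGER):].strip()


def build_prompt(question):
    """Mirror the Human/AI prompt format passed to the completion call."""
    return f"Human: {question} \n AI:"


def clean_reply(raw):
    """Trim whitespace and drop one stray leading question mark (ASCII or full-width)."""
    text = raw.strip()
    if text.startswith(("?", "?")):
        text = text[1:]
    return text.strip()


print(extract_question("機器人:你好"))
print(extract_question("hello everyone"))
print(repr(clean_reply("\n\n? Hi there ")))
```

Keeping these steps as small pure functions makes it easy to change the trigger word or the prompt wording later without touching the webhook code.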

GCP

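To generate requirements.txt from a clean environment, I used Docker:

    docker run -it --rm python:3.8 bash
    pip3 install python-telegram-bot
    pip3 install openai
    pip3 install flask
    pip3 freeze

This lists every library involved. Save the output as requirements.txt. The code in this post relies on the python-telegram-bot 13.x and openai 0.x APIs, so keep the pinned versions: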

    APScheduler==3.6.3
    backports.zoneinfo==0.2.1
    cachetools==4.2.2
    certifi==2022.9.24
    charset-normalizer==2.1.1
    click==8.1.3
    et-xmlfile==1.1.0
    Flask==2.2.2
    idna==3.4
    importlib-metadata==5.1.0
    itsdangerous==2.1.2
    Jinja2==3.1.2
    MarkupSafe==2.1.1
    numpy==1.23.5
    openai==0.25.0
    openpyxl==3.0.10
    pandas==1.5.2
    pandas-stubs==1.5.2.221124
    python-dateutil==2.8.2
    python-telegram-bot==13.14
    pytz==2022.6
    pytz-deprecation-shim==0.1.0.post0
    requests==2.28.1
    six==1.16.0
    tornado==6.1
    tqdm==4.64.1
    types-pytz==2022.6.0.1
    typing_extensions==4.4.0
    tzdata==2022.7
    tzlocal==4.2
    urllib3==1.26.13
    Werkzeug==2.2.2
    zipp==3.11.0

Next, create an app.yaml.

Put the following in it:

    service: openai-chatbot
    runtime: python38

GCP AppEngine (PaaS)

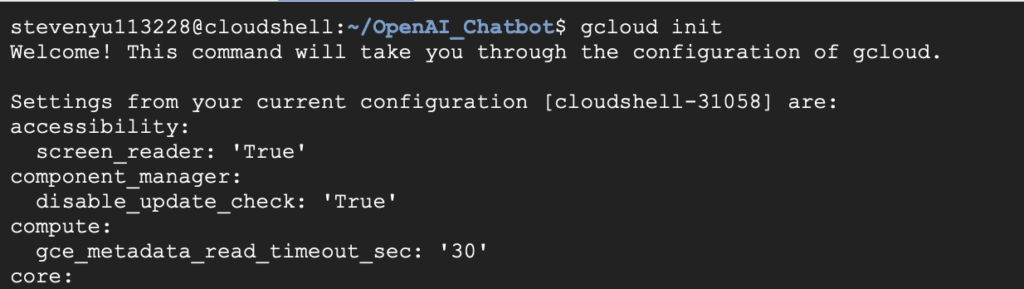

Open the editor in GCP Cloud Shell

and paste all the files in.

cd into the folder, then run gcloud init

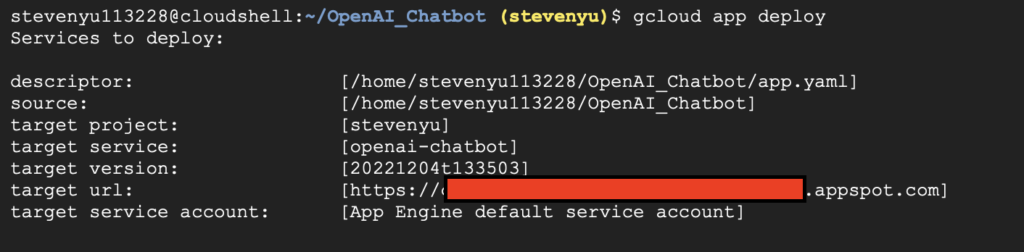

Next, run gcloud app deploy

Wait for the deploy to finish, set the webhook the same way as before, and the bot is ready to play with!

https://api.telegram.org/bot{API_TOKEN}/setWebhook?url=https://{APP Engine URL}/hook
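To confirm that the webhook now points at App Engine, the Bot API's getWebhookInfo method can be queried. The sketch below shows one way to read its answer; the helper names and the sample response are hypothetical (the `example.appspot.com` URL is a placeholder), and the network call is defined but not executed here.

```python
import json
from urllib.request import urlopen


def webhook_info_url(api_token):
    """URL of the Bot API getWebhookInfo method for this bot."""
    return f"https://api.telegram.org/bot{api_token}/getWebhookInfo"


def check_webhook(info, expected_url):
    """Return a list of human-readable problems found in a getWebhookInfo response."""
    if not info.get("ok"):
        return ["Telegram returned ok=false"]
    problems = []
    result = info.get("result", {})
    if result.get("url") != expected_url:
        problems.append(f"webhook url is {result.get('url')!r}, expected {expected_url!r}")
    if result.get("last_error_message"):
        problems.append(f"last delivery error: {result['last_error_message']}")
    return problems


def fetch_webhook_info(api_token):
    """Call Telegram (needs network access and a real token); not run in this sketch."""
    with urlopen(webhook_info_url(api_token)) as resp:
        return json.load(resp)


# Hand-written sample response with a placeholder URL, for illustration only.
sample = {"ok": True, "result": {"url": "https://example.appspot.com/hook", "pending_update_count": 0}}
print(check_webhook(sample, "https://example.appspot.com/hook"))
```

An empty list means the registered URL matches and Telegram has not recorded a delivery error. If the bot stays silent after deploying, the `last_error_message` field is usually the first place to look.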